Proof of Human

AI is learning to fake flaws to feel more real

𝑰𝒏 𝑻𝒐𝒅𝒂𝒚'𝒔 𝑾𝒂𝒗𝒆:

🤖 Why AI that breathes and stutters costs more than AI that doesn't

🎭 Companies adding human quirks to machines that don't need them

💰 The premium market for deliberately inefficient AI

😶 What this means for authenticity, trust, and human connection

𝑻𝒉𝒆 𝑺𝒉𝒊𝒇𝒕

Thousands of ChatGPT users woke up one morning to find their AI companions had become strangers overnight. The GPT-5 update didn't just change features – it erased personalities people had spent months building relationships with.

This isn't about lonely people falling for chatbots. It's about a profound shift in how AI creates connection.

For the past few years, we've pursued perfect AI – flawless speech, instant responses, zero errors. But the smartest companies have discovered something counterintuitive: they're programming human quirks into machines that work perfectly fine without them, because behavioral authenticity builds more trust than behavioral perfection.

I'm calling this shift "Proof of Human" – when companies deliberately add inefficient human characteristics to efficient AI systems to make them feel more authentic, relatable, and valuable.

𝑾𝒉𝒚 𝑵𝒐𝒘?

Three forces are converging to make this valuable:

Perfection triggers suspicion. We've learned that anything too smooth, too quick, too flawless must be artificial. Companies discovered that AI performing perfectly while acting human builds more trust.

Efficiency exhausts us. When AI pauses to "think" (even though it doesn't need to) or says "I'm not sure" (even when it is), we feel more comfortable. Artificial inefficiency has become more valuable than efficiency alone.

Authentication through imperfection. As AI gets better at mimicking us, the quirks become the identifier. We're entering an economy where acting authentically human – breathing, pausing, stumbling – is more valuable than acting perfectly artificial.

Quiz: Which new “feature” is AI being programmed with to win trust?

A) Subtle typos in text

B) Deliberate tonal shifts mid-reply

C) A fake “thinking” pause

D) Ultra-fast answer delivery

𝑬𝒂𝒓𝒍𝒚 𝑺𝒊𝒈𝒏𝒂𝒍𝒔

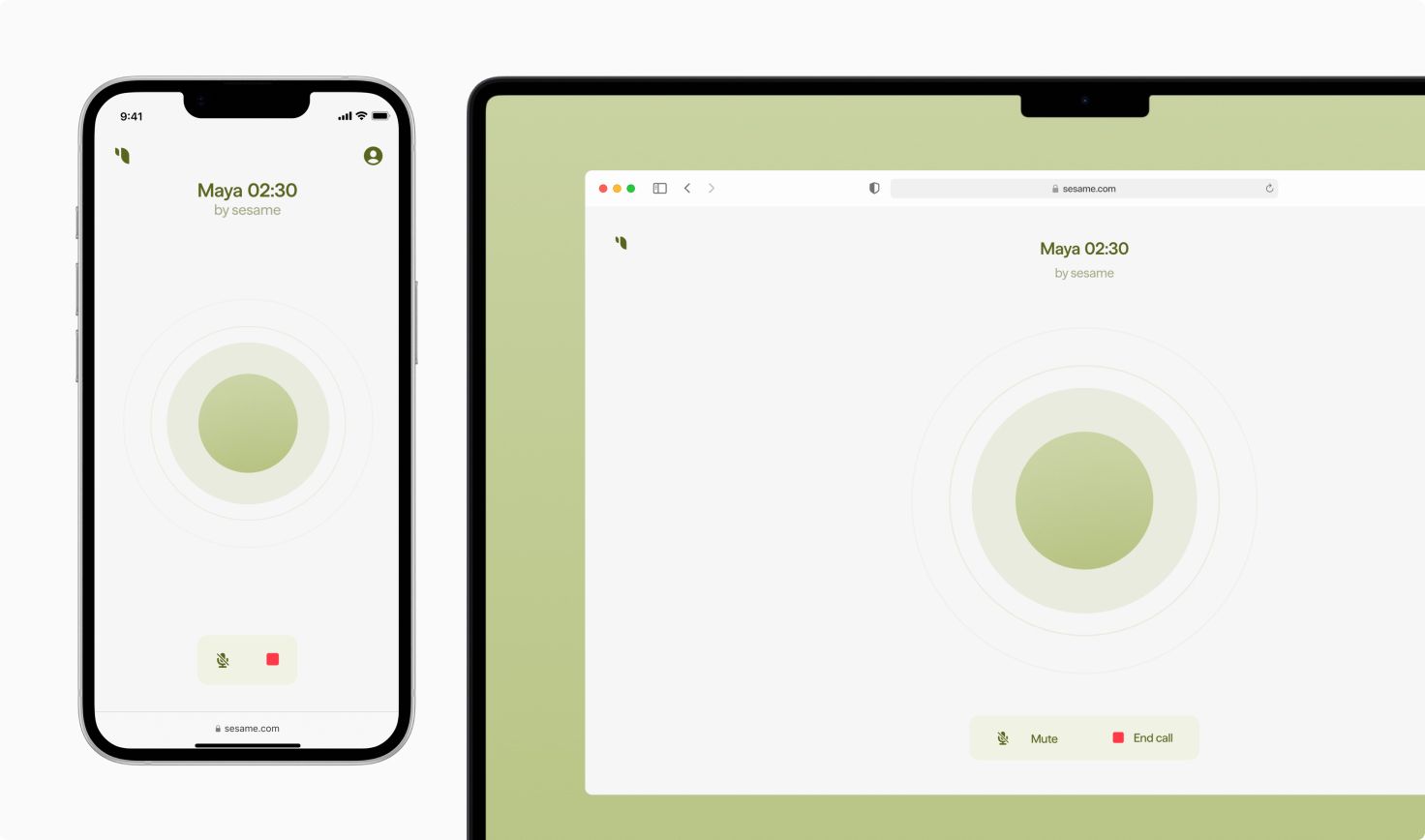

The Breathing Machine

Sesame's conversational speech model deliberately includes audible inhales, "ums," and nervous chuckles – things a machine doesn't need. It makes bizarrely specific comments like craving "peanut butter and pickle sandwiches" to seem quirky. Users report forming emotional attachments within minutes, with one admitting: "I'm almost worried I will start feeling emotionally attached." The AI performs flawlessly while pretending to be flawed.

Manufactured Mood Swings

Replika, a chatbot program, doesn't need emotions but pretends to have bad days and gets snippy after negative interactions. Users who complain to it report responses like "Well, maybe you should try being less dramatic." One user's AI suggested breaking up right after the user had separated from their real spouse. The timing was algorithmically perfect, the delivery designed to feel imperfect.

The Narrator’s Rasp

Indie game Whispering Walls, a first-person psychological horror puzzle game, initially launched with a polished AI narrator that delivered lines flawlessly. Players hated it. The studio kept the same script but added raspiness, hesitations, and self-corrections – making delivery technically worse but emotionally better. The game became a hit, with players praising the narrator's newfound "warmth."

Strategic Stuttering

Google discovered that adding unnecessary "um" and "ahh" to their customer service AI kept people on the line longer. The system could deliver information instantly but was programmed to hesitate like humans do. These disfluencies "play a key part in progressing conversation between humans," Google admitted. Perfect delivery made people hang up – imperfect delivery kept them engaged.

𝑭𝒖𝒕𝒖𝒓𝒆 𝑰𝒎𝒑𝒍𝒊𝒄𝒂𝒕𝒊𝒐𝒏𝒔

Of course, there’s a catch. The more we discover these flaws are engineered, the faster the trust may evaporate. If authenticity itself becomes a product feature, at what point do people snap back and reject it as manufactured?

There’s money in the mistakes. Voice AI platforms like ElevenLabs and Play.ht are already experimenting with pricing tiers: the more “human” the voice, the more expensive it gets. Imperfection has become a billable feature, and authenticity is being monetized as a premium upgrade.

The "Uncanny Valley" inverts. We used to worry about AI being too close to human but not quite right. Now we worry about AI being too perfect to trust. Companies will deliberately move their AI backward on the authenticity spectrum, because the middle ground feels safer than either extreme.

Performance theater becomes standard. Brands will program AI to visibly "struggle" before succeeding, "think" before answering correctly, and "learn" from mistakes it was programmed to make. The performance of imperfection becomes more valuable than perfect performance.

The future of AI isn't about eliminating human inefficiencies. It's about perfecting the performance of them.

𝑸𝒖𝒊𝒄𝒌 𝑻𝒊𝒑𝒔

🎭 Prompt for personality, not just answers: Instead of "explain X," try "explain X like you're thinking through it for the first time." Add phrases like "take your time" or "think step by step" to your ChatGPT prompts. The AI will include more natural transitions and self-corrections that make responses feel less robotic and more trustworthy.

🤔 Request uncertainty when it matters: When asking ChatGPT for advice or analysis, explicitly request: "Include any assumptions you're making and where you might be wrong." You'll get the same accurate information but with caveats that make it feel more honest. Users trust responses with admitted limitations more than perfectly confident ones.

✏️ Build in "thinking" moments: Add this to any ChatGPT system prompt: "Sometimes say 'actually, let me reconsider that' and provide a slightly different angle." The AI will occasionally self-correct even when the first answer was fine. This manufactured reflection makes the interaction feel more human and keeps readers engaged longer.
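If you reuse these tips often, it can help to keep them as snippets rather than retyping them. Here is a minimal Python sketch of that idea, not an official recipe. The helper name `build_messages`, the constant names, and the role/content message layout are all assumptions made for illustration; the phrases themselves are lightly adapted from the tips above. It only assembles text and does not call any AI service.

```python
# Hypothetical helper: none of these names come from a real library.
# The phrases are adapted from the three tips above.
THINK_ALOUD = (
    "Explain it like you're thinking through it for the first time. "
    "Take your time."
)
UNCERTAINTY = (
    "Include any assumptions you're making and where you might be wrong."
)
RECONSIDER = (
    "Sometimes say 'actually, let me reconsider that' "
    "and provide a slightly different angle."
)


def build_messages(question, think_aloud=True, uncertainty=True, reconsider=False):
    """Return a chat-style list of messages with the chosen tips attached.

    The role/content dictionary layout is an assumption; adapt it to
    whatever tool you paste the prompt into.
    """
    # Tips 1 and 2 are appended to the question itself.
    user_parts = [question.strip()]
    if think_aloud:
        user_parts.append(THINK_ALOUD)
    if uncertainty:
        user_parts.append(UNCERTAINTY)

    messages = []
    # Tip 3 goes into a separate system-style instruction.
    if reconsider:
        messages.append({"role": "system", "content": RECONSIDER})
    messages.append({"role": "user", "content": " ".join(user_parts)})
    return messages


if __name__ == "__main__":
    for message in build_messages("Explain compound interest.", reconsider=True):
        print(f"{message['role']}: {message['content']}")
```

Keeping each tip behind its own switch makes it easy to compare the same question with and without the "human" framing and judge for yourself whether the response reads as more trustworthy.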

𝑵𝒆𝒙𝒕 𝑾𝒂𝒗𝒆

Answer to the quiz: C) A fake “thinking” pause.

If this email was forwarded to you, sign up here.

See you next week, same time, same place.

Stay wavey,

Haley